The scenario below describes the troubleshooting steps for recovering an EC2 instance from EBS boot failures.

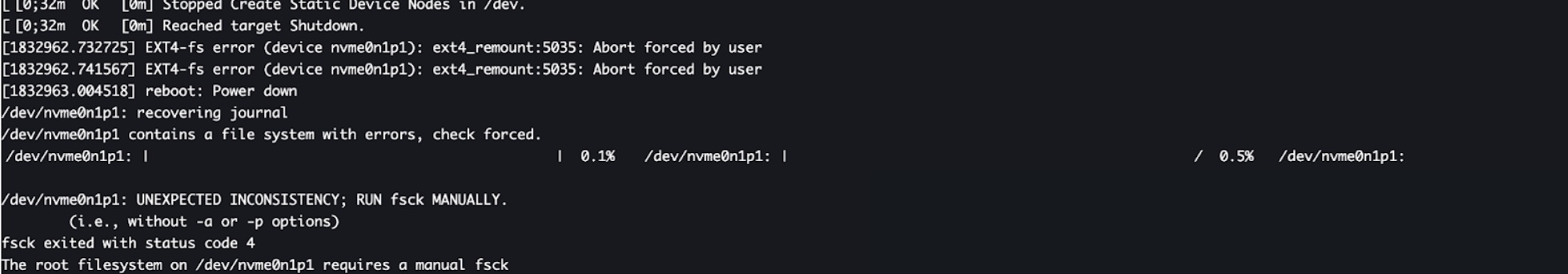

Issue: The root file system failed its consistency check, and the instance could no longer boot.

Follow this procedure to bring the EC2 instance back:

-

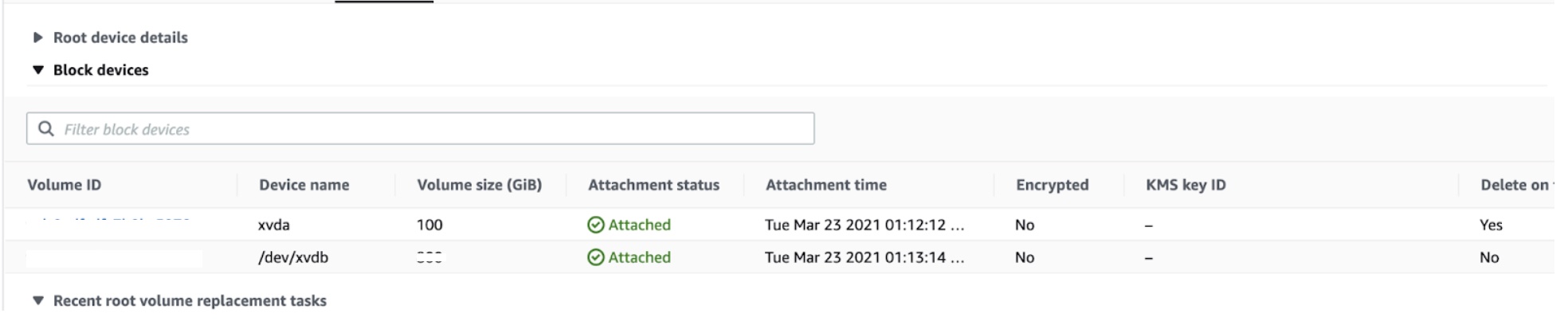

Capture the current EBS volume metadata from AWS console,especially the device mount name.
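The same metadata can also be captured from the command line. This is a minimal sketch, assuming the AWS CLI is installed and configured for the right account and Region; `capture_volume_metadata` is a hypothetical helper name, and the instance ID you pass in is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: record the root device name and attached volumes before changing anything.
# capture_volume_metadata is a hypothetical helper; assumes a configured AWS CLI.
set -euo pipefail

capture_volume_metadata() {
  local instance_id="$1"

  # Root device name (e.g. /dev/xvda), needed when the volume is reattached later.
  aws ec2 describe-instances \
    --instance-ids "$instance_id" \
    --query 'Reservations[0].Instances[0].RootDeviceName' \
    --output text

  # Volume ID and device name of each volume attached to the instance.
  aws ec2 describe-volumes \
    --filters "Name=attachment.instance-id,Values=$instance_id" \
    --query 'Volumes[].Attachments[].[VolumeId,Device]' \
    --output text
}
```

For example, `capture_volume_metadata i-0123456789abcdef0 > volume-metadata.txt` keeps a copy of the root device name and volume attachments for the reattach step.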

- Stop the EC2 instance that needs to be troubleshot.
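A CLI equivalent of this step, sketched under the same assumption of a configured AWS CLI; `stop_and_wait` is a hypothetical helper. Waiting for the stopped state matters because a root volume can only be detached once its instance is stopped.

```shell
#!/usr/bin/env bash
# Sketch: stop the instance and block until EC2 reports it as stopped.
# stop_and_wait is a hypothetical helper; assumes a configured AWS CLI.
set -euo pipefail

stop_and_wait() {
  local instance_id="$1"
  aws ec2 stop-instances --instance-ids "$instance_id"
  # Polls until the instance is "stopped" (fails if the waiter times out).
  aws ec2 wait instance-stopped --instance-ids "$instance_id"
}
```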

-

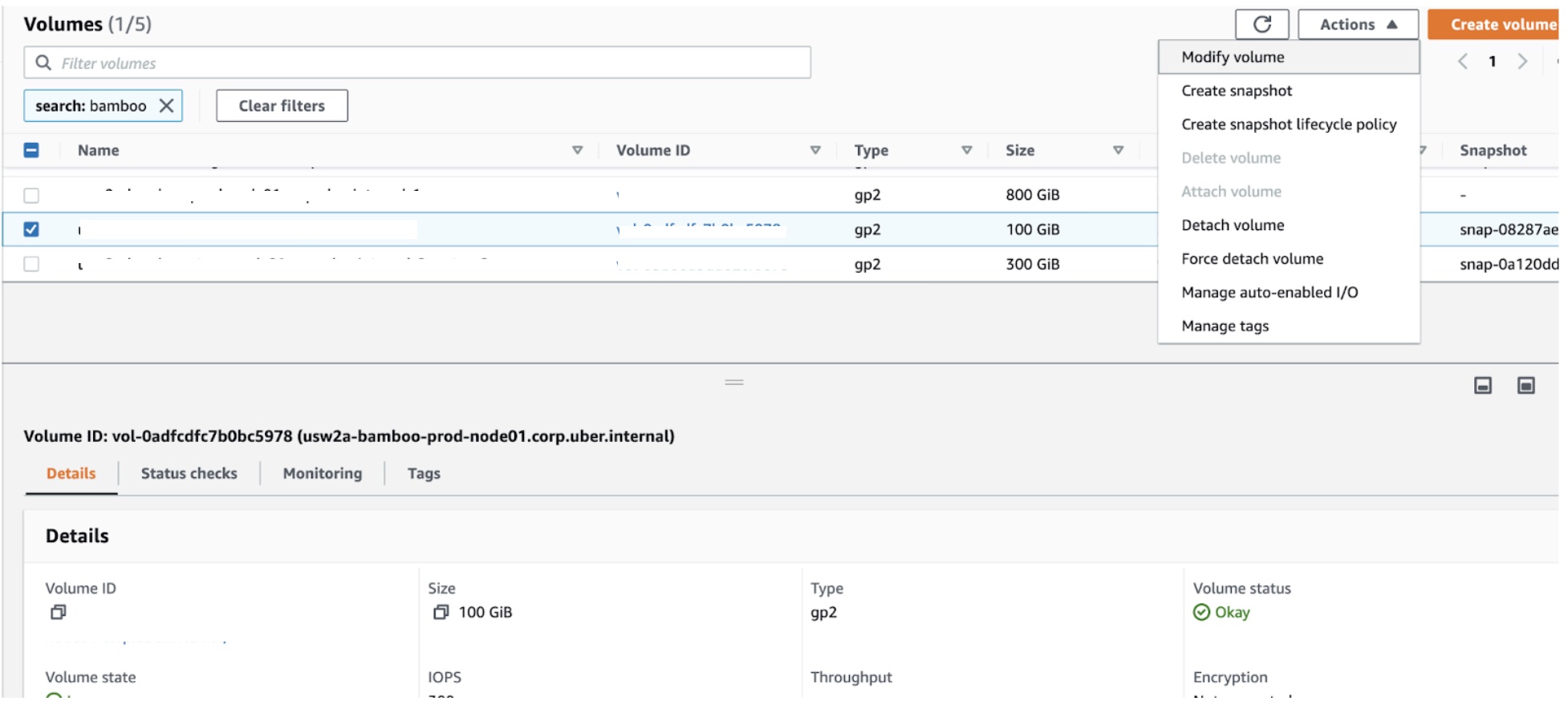

Detach the volume which has to be fixed from the AWS management console.
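From the CLI, the detach can be sketched as follows (same assumptions; `detach_and_wait` is a hypothetical name). The `volume-available` waiter returns once the volume is fully detached and can be attached to another instance.

```shell
#!/usr/bin/env bash
# Sketch: detach the broken volume and wait until it is free to attach elsewhere.
# detach_and_wait is a hypothetical helper; assumes a configured AWS CLI.
set -euo pipefail

detach_and_wait() {
  local volume_id="$1"
  aws ec2 detach-volume --volume-id "$volume_id"
  # Blocks until the volume state is "available".
  aws ec2 wait volume-available --volume-ids "$volume_id"
}
```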

- Use the same console to attach the volume to a rescue instance (a separate, healthy EC2 instance in the same Availability Zone) as a secondary device (`/dev/sdf`).
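Attaching to the rescue instance from the CLI might look like this; `attach_to_rescue` is a hypothetical helper, and `/dev/sdf` is used as the default device name to match the step above.

```shell
#!/usr/bin/env bash
# Sketch: attach the volume to the rescue instance as a secondary device.
# attach_to_rescue is a hypothetical helper; assumes a configured AWS CLI.
set -euo pipefail

attach_to_rescue() {
  local volume_id="$1" rescue_instance_id="$2" device="${3:-/dev/sdf}"
  aws ec2 attach-volume \
    --volume-id "$volume_id" \
    --instance-id "$rescue_instance_id" \
    --device "$device"
  # Blocks until the volume state is "in-use".
  aws ec2 wait volume-in-use --volume-ids "$volume_id"
}
```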

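- Check that the disk is available on the rescue EC2 instance using `lsblk`. On Nitro-based instances, the volume attached as `/dev/sdf` shows up under an NVMe device name (for example, `/dev/nvme2n1`).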
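On Nitro-based instances, EBS volumes are exposed as NVMe devices whose serial number is the volume ID without the hyphen, so the attached volume can be matched to its device name. The sketch below assumes that naming (it does not apply to Xen-based instances, where the volume typically appears as `/dev/xvdf`); `find_device_for_volume` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: map an EBS volume ID to its NVMe device name on a Nitro-based instance.
# find_device_for_volume is a hypothetical helper; it assumes EBS NVMe devices
# report the volume ID (without the hyphen) as their serial number.
set -euo pipefail

find_device_for_volume() {
  local volume_id="$1"
  local serial="${volume_id//-/}"  # vol-0abc123 -> vol0abc123

  # -d: whole disks only, -n: no header, -o: print just NAME and SERIAL.
  lsblk -dn -o NAME,SERIAL | awk -v s="$serial" '$2 == s { print "/dev/" $1 }'
}
```

Because `-d` lists whole disks only, a partitioned volume needs `fsck` run against the partition (for example `/dev/nvme1n1p1`) rather than the disk itself.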

- With the volume unmounted, run `fsck` against its device to fix the errors: `fsck <device>`, for example `fsck /dev/nvme2n1`.

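- If the command above reports an error, run `fsck` without a device argument. Note that with no arguments, `fsck` checks the file systems listed in the rescue instance's `/etc/fstab`.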
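Before running `fsck`, it is worth confirming the device is not mounted and checking its file system type: on XFS (the default root file system on Amazon Linux 2), `fsck.xfs` is essentially a no-op, and `xfs_repair` is the tool that actually repairs it. A minimal sketch, with `repair_fs` as a hypothetical helper that relies on util-linux `findmnt` and `blkid`:

```shell
#!/usr/bin/env bash
# Sketch: repair the file system on the attached volume from the rescue instance.
# repair_fs is a hypothetical helper; it needs findmnt/blkid (util-linux) and
# xfs_repair (xfsprogs) for XFS volumes.
set -euo pipefail

repair_fs() {
  local device="$1"
  local fstype

  # Refuse to touch a mounted file system: repairing it in place can corrupt it.
  if findmnt --source "$device" >/dev/null; then
    echo "refusing: $device is mounted" >&2
    return 1
  fi

  # File system type as detected by blkid (e.g. xfs, ext4).
  fstype="$(blkid -o value -s TYPE "$device")" || return

  case "$fstype" in
    xfs) xfs_repair "$device" ;;  # fsck.xfs does not repair, so call xfs_repair directly
    *)   fsck "$device" ;;        # ext2/3/4 and others go through the fsck front end
  esac
}
```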

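- The commands above can be run with the `-N` flag as a dry run, but `-N` only prints which file-system-specific checker `fsck` would run. To see the errors that would be fixed without changing anything, use `-n` instead: for ext file systems, `fsck -n <device>` opens the file system read-only and answers "no" to every repair prompt.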
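A read-only check along these lines can be sketched as follows; `check_fs_readonly` is a hypothetical helper. It uses `fsck -n` for ext file systems and `xfs_repair -n` (no-modify mode) for XFS, so neither path writes to the device.

```shell
#!/usr/bin/env bash
# Sketch: report file system problems without writing to the device.
# check_fs_readonly is a hypothetical helper; needs blkid (util-linux).
set -euo pipefail

check_fs_readonly() {
  local device="$1"
  local fstype
  fstype="$(blkid -o value -s TYPE "$device")" || return

  case "$fstype" in
    xfs) xfs_repair -n "$device" ;;  # -n: no-modify mode, only reports problems
    *)   fsck -n "$device" ;;        # for ext, -n reaches e2fsck: read-only, answer "no"
  esac
}
```

A non-zero exit status generally means problems were found, which is the cue to run the actual repair.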

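- Once the file system errors are fixed, detach the EBS volume from the rescue EC2 instance and attach it to the instance that was taken down for maintenance, using the original device name you captured at the start (for example, `/dev/xvda`) so that it becomes the root volume again.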
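Moving the volume back can be sketched from the CLI as well (same assumptions; `reattach_as_root` is a hypothetical helper). The third argument is the root device name captured at the start, for example `/dev/xvda` or `/dev/sda1` depending on the AMI. Make sure the volume is not mounted on the rescue instance before detaching it.

```shell
#!/usr/bin/env bash
# Sketch: move the repaired volume back to the original instance as its root device.
# reattach_as_root is a hypothetical helper; assumes a configured AWS CLI.
set -euo pipefail

reattach_as_root() {
  local volume_id="$1" original_instance_id="$2" root_device="$3"

  aws ec2 detach-volume --volume-id "$volume_id"
  aws ec2 wait volume-available --volume-ids "$volume_id"

  # Reuse the original root device name so the instance boots from this volume.
  aws ec2 attach-volume \
    --volume-id "$volume_id" \
    --instance-id "$original_instance_id" \
    --device "$root_device"
  aws ec2 wait volume-in-use --volume-ids "$volume_id"
}
```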

- Start the EC2 instance and check the system log.
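Starting the instance and pulling its system log from the CLI might look like this; `start_and_show_log` is a hypothetical helper. `get-console-output` returns the instance's console (system) log, which is buffered and posted shortly after boot, so an empty or stale result right after start is not necessarily a failure.

```shell
#!/usr/bin/env bash
# Sketch: start the repaired instance and print its console (system) log.
# start_and_show_log is a hypothetical helper; assumes a configured AWS CLI.
set -euo pipefail

start_and_show_log() {
  local instance_id="$1"
  aws ec2 start-instances --instance-ids "$instance_id"
  aws ec2 wait instance-running --instance-ids "$instance_id"
  # Console output is buffered and may lag behind the actual boot.
  aws ec2 get-console-output --instance-id "$instance_id" --query Output --output text
}
```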

If the process is successful, the instance should boot normally and its services should be running again.

This URL has additional troubleshooting steps for EBS volumes.