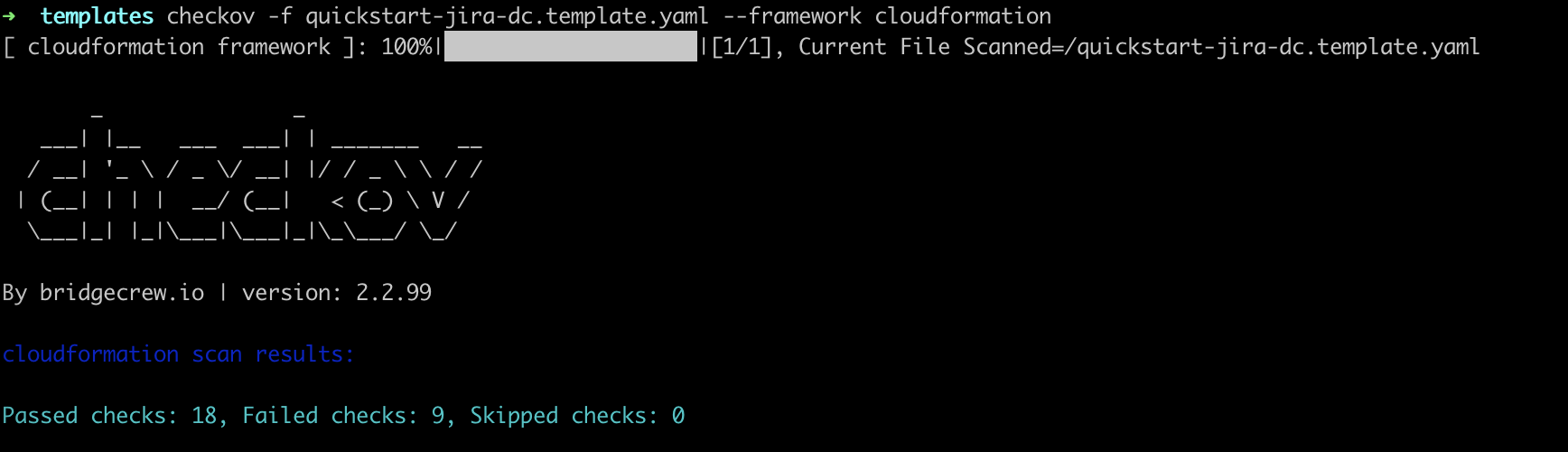

If your infrastructure is deployed and maintained as code, chances are you are using Terraform or CloudFormation. In those cases, policy enforcement is most likely an afterthought or takes a back seat.

Read More

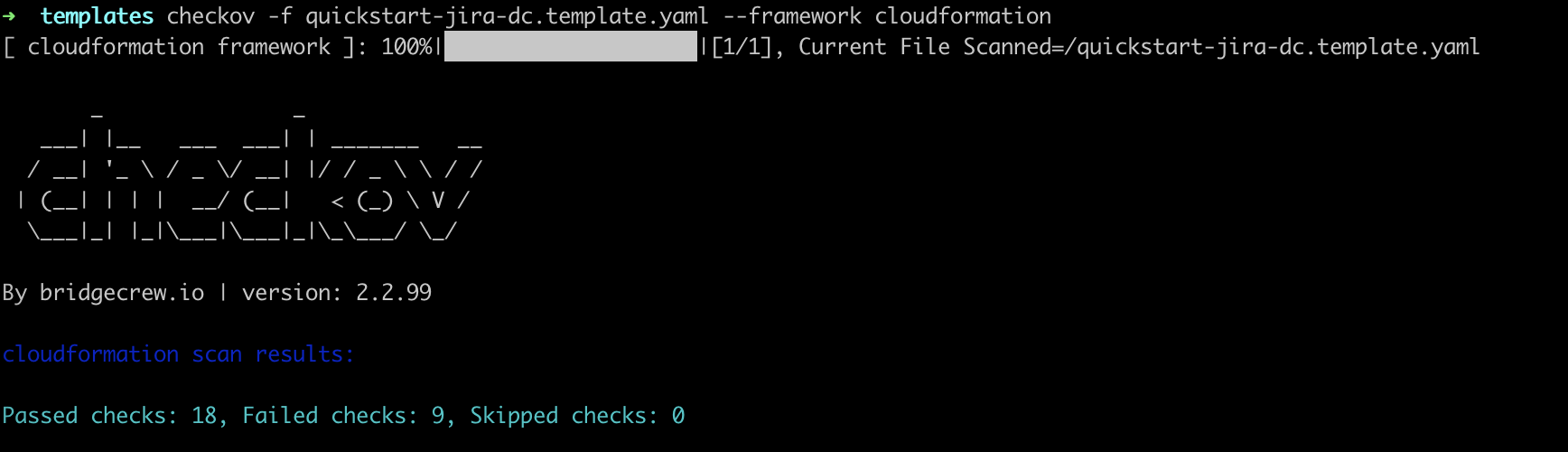

If you manage your infrastructure as code with CloudFormation stacks shared among multiple users, you may have run into a situation where someone ran or modified a stack and nobody else was aware of the change. To track and get alerted on such changes, EventBridge now supports CloudFormation events, which can be routed to SNS or a custom alerting system.

Read More
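As a rough sketch of the wiring, assuming stack changes arrive in EventBridge with the detail type `CloudFormation Stack Status Change`, a Terraform-managed rule could forward them to an SNS topic like this. The topic, rule and target names are hypothetical, and subscribing people or tools to the topic is left out.

```hcl
# Hypothetical topic that receives stack change notifications
resource "aws_sns_topic" "cfn_alerts" {
  name = "cfn-stack-change-alerts"
}

# Match every CloudFormation stack status change in this account/region
resource "aws_cloudwatch_event_rule" "cfn_stack_changes" {
  name        = "cfn-stack-status-change"
  description = "Capture CloudFormation stack status changes"

  event_pattern = jsonencode({
    source        = ["aws.cloudformation"]
    "detail-type" = ["CloudFormation Stack Status Change"]
  })
}

# Send matched events to the SNS topic
resource "aws_cloudwatch_event_target" "to_sns" {
  rule      = aws_cloudwatch_event_rule.cfn_stack_changes.name
  target_id = "cfn-alerts-sns"
  arn       = aws_sns_topic.cfn_alerts.arn
}

# EventBridge needs permission to publish to the topic
resource "aws_sns_topic_policy" "allow_eventbridge" {
  arn = aws_sns_topic.cfn_alerts.arn

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Principal = { Service = "events.amazonaws.com" }
      Action    = "sns:Publish"
      Resource  = aws_sns_topic.cfn_alerts.arn
    }]
  })
}
```

Narrowing the pattern (for example to specific stack IDs under `detail`) would cut down on noise if many stacks live in the same account.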

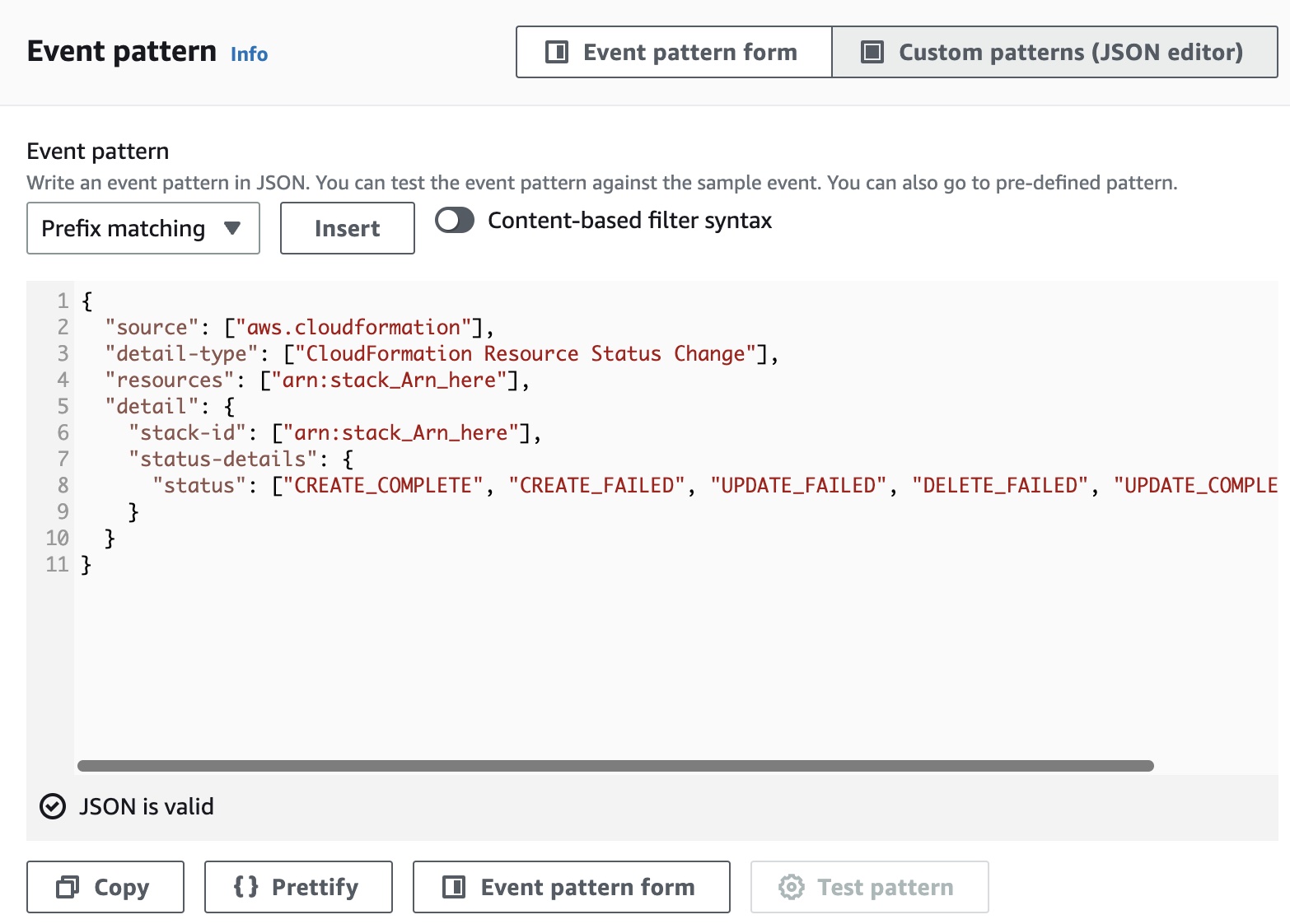

AWS EC2-Classic is already being retired, and the official migration deadline is coming up.

Read More

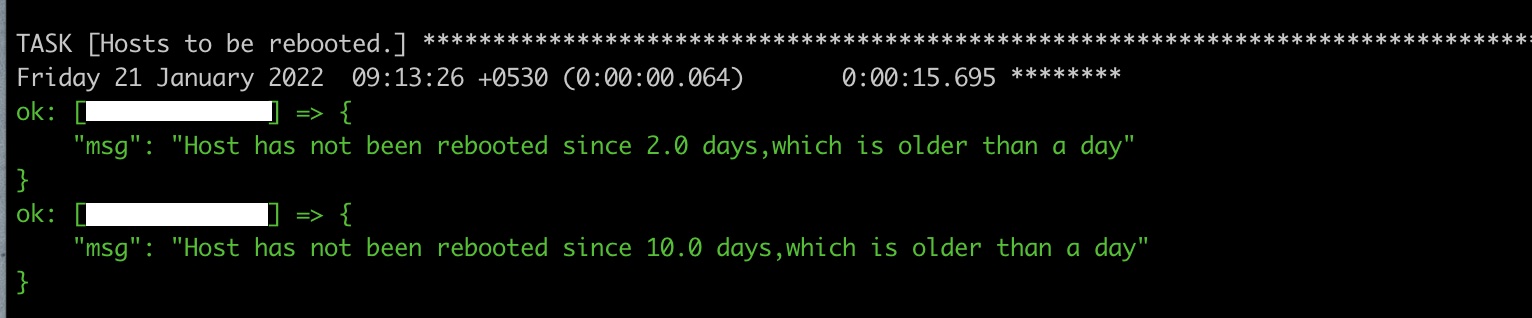

When working with EC2 Linux instances, you may need a list of all machines that have not been rebooted in months, or in a given number of days. The reason could be an audit or simply checking the state of the instances.

Read More

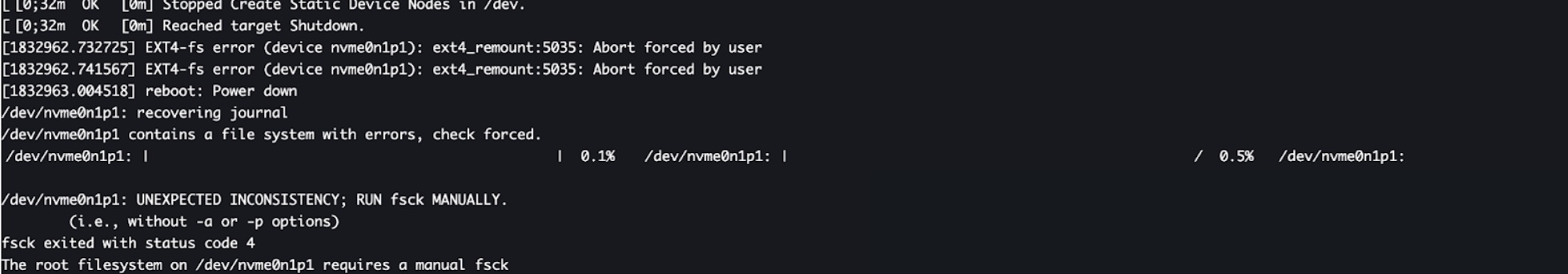

The scenario below walks through the troubleshooting steps to recover an EC2 instance from EBS boot failures.

Read More

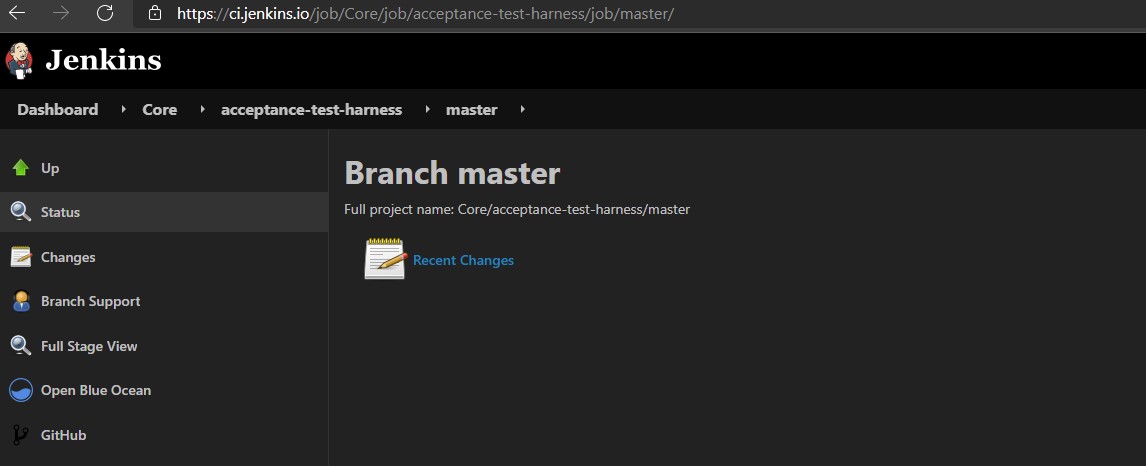

While working with Jenkins, we needed to collect details of the jobs/builds that had been run on the server and derive statistics like

Read More

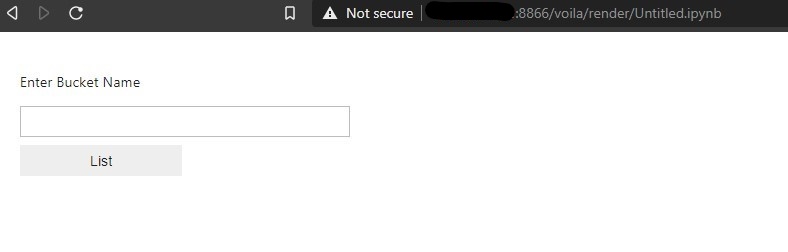

Scenario: Continuing from the previous post here, where users had to access a Jupyter Notebook to browse files, we now need to build a website/webpage that offers a seamless experience and hides the code and notebook interface.

Read More

Scenario: Users need to access and download files from an S3 bucket, but must not upload files or change its contents.

Read More
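A minimal IAM policy for this kind of read-only access could look like the following in Terraform. It assumes a hypothetical `bucket_name` variable and grants only `s3:ListBucket` on the bucket and `s3:GetObject` on its objects, so uploads and deletes stay denied by default.

```hcl
variable "bucket_name" {
  description = "Bucket users may browse and download from (hypothetical)"
  type        = string
}

data "aws_iam_policy_document" "s3_read_only" {
  # Listing keys is a bucket-level action
  statement {
    sid       = "ListBucket"
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::${var.bucket_name}"]
  }

  # Downloading is an object-level action; no Put/Delete actions are granted
  statement {
    sid       = "GetObjects"
    actions   = ["s3:GetObject"]
    resources = ["arn:aws:s3:::${var.bucket_name}/*"]
  }
}

resource "aws_iam_policy" "s3_read_only" {
  name   = "s3-read-only-download"
  policy = data.aws_iam_policy_document.s3_read_only.json
}
```

The policy can then be attached to the users' group or role; the ARN split between the bucket and `bucket/*` matters because list and get actions apply to different resource types.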

Anyone running an EC2-backed ECS cluster knows the pain that goes into maintaining and patching the base machines running our ECS containers. We usually pick two paths

Read More

We’ll provide a high-level overview of three common blueprints here. We start with the blueprint for modern business intelligence, which focuses on cloud-native data warehouses and analytics use cases. In the second blueprint, we look at multimodal data processing, covering both analytic and operational use cases built around the data lake. In the final blueprint, we zoom into operational systems and the emerging components of the AI and ML stack.

Interesting post on how architectures are going to evolve.

Step Functions are very useful if you plan to build a workflow across multiple AWS services. They connect different AWS resources and give you control over the execution path based on predefined conditions and feedback.

Read More
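To give an idea of that execution-path control, here is a small sketch of a state machine defined through Terraform: a Task state calls a Lambda function and a Choice state branches on its output. The role ARN, the Lambda ARN and the `status` field in the Lambda's output are all hypothetical.

```hcl
variable "sfn_role_arn" { type = string }     # hypothetical execution role
variable "check_lambda_arn" { type = string } # hypothetical Lambda function

resource "aws_sfn_state_machine" "example" {
  name     = "example-workflow"
  role_arn = var.sfn_role_arn

  # Amazon States Language definition, built with jsonencode
  definition = jsonencode({
    Comment = "Run a check, then branch on its result"
    StartAt = "RunCheck"
    States = {
      RunCheck = {
        Type     = "Task"
        Resource = var.check_lambda_arn
        Next     = "IsHealthy"
      }
      # Route on the "status" field returned by the Lambda
      IsHealthy = {
        Type = "Choice"
        Choices = [{
          Variable     = "$.status"
          StringEquals = "OK"
          Next         = "Done"
        }]
        Default = "Failed"
      }
      Done   = { Type = "Succeed" }
      Failed = { Type = "Fail", Error = "CheckFailed", Cause = "Status was not OK" }
    }
  })
}
```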

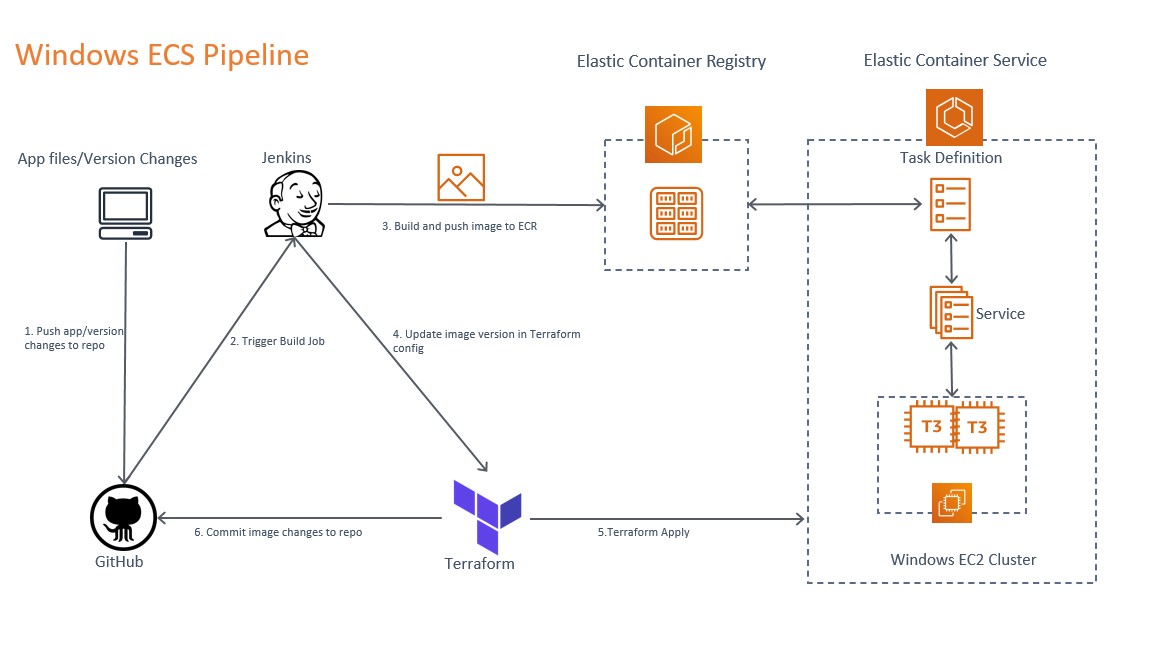

If you have run Windows container workloads on AWS as described here, you may already know that implementing a build and deployment pipeline is not a straightforward task.

Read More

Suppose we have successfully deployed our infrastructure using Terraform scripts. Now we want to visualize it and see how the created resources relate to and depend on each other.

Read More

Most of the time, what programming really is - is just a lot of trial and error. Debugging on the other hand is - in my opinion - an Art and becoming good at it takes time and experience - the more you know the libraries or framework you use, the easier it gets.

Different ways to debug your Python code with logging.

To show off the versatility of RabbitMQ, we are going to use three case studies that demonstrate how RabbitMQ is well suited as a black-box managed service approach, as one that integrates tightly with the application enabling a well-functioning micro-service architecture, or as a gateway to other legacy project

Good write-up introducing RabbitMQ and its use cases.

If you use Terraform to deploy AWS infrastructure that includes Auto Scaling groups and scaling policies on EC2 instances and ECS services, you have to prevent every IaC deployment from resetting the desired count of services that have scaled up.
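The usual Terraform mechanism for this is a `lifecycle` block with `ignore_changes`: the desired count is set once at creation and afterwards left to auto scaling. A sketch, with hypothetical variables for the cluster, task definition, subnets and launch template:

```hcl
variable "cluster_id" { type = string }          # hypothetical
variable "task_definition_arn" { type = string } # hypothetical
variable "launch_template_id" { type = string }  # hypothetical
variable "subnet_ids" { type = list(string) }    # hypothetical

resource "aws_ecs_service" "app" {
  name            = "app"
  cluster         = var.cluster_id
  task_definition = var.task_definition_arn
  desired_count   = 2 # only used when the service is first created

  lifecycle {
    # Auto scaling owns this value after creation; don't reset it on apply
    ignore_changes = [desired_count]
  }
}

resource "aws_autoscaling_group" "ecs_hosts" {
  name                = "ecs-hosts"
  min_size            = 1
  max_size            = 4
  desired_capacity    = 1 # initial value only
  vpc_zone_identifier = var.subnet_ids

  launch_template {
    id      = var.launch_template_id
    version = "$Latest"
  }

  lifecycle {
    # Same idea for the EC2 hosts: keep the scaled-out capacity
    ignore_changes = [desired_capacity]
  }
}
```

The trade-off is that later edits to these attributes in code are also ignored, so changing the baseline then requires a manual update or temporarily removing the `ignore_changes` entry.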

Read More

Service mesh is a blazing hot topic in software engineering right now and rightfully so. I think it’s extremely promising technology and I’d love to see it widely adopted (in cases where it truly makes sense). Yet it remains shrouded in mystery for many people and even those who are familiar with it have trouble articulating what it’s good for and what it even is (like yours truly)

Good description of all the use cases for a service mesh.

You shouldn’t pick up a given technology and integrate it with your stack just because it’s the trendy thing. We didn’t jump into the cloud 10 years ago just because it was cool, it felt like we were comparatively late to adopt Kubernetes, we don’t use a service mesh. We’ve let these technologies mature, and now we’re getting real value.

Nice read; it gives insight into the architecture decisions behind hey.com.

Building automated deployment safety into the release process by using extensive pre-production testing, automatic rollbacks, and staggered production deployments lets us minimize the potential impact on production caused by deployments. This means that developers don’t need to actively watch deployments to production.

Extensive blog post on deployment and release methodologies followed at AWS, worth reading for anyone working on CI/CD implementations.

Telegram can be used for ChatOps through its extensive Bot API. Its user base and the availability of mobile and web apps make it a good candidate for personal and small-business use, if not for the enterprise.

Read More

Linting your code, checking for security, compliance and validity will help you improve your Terraform code quality very easily from hundreds of different resources and cloud providers.

Different ways and tools to maintain your Terraform code quality

The simple answer is that different databases support different use cases. After all, “every AWS product is for somebody, and no AWS product is for everybody.”

As the name says, a quick safari through all the database services offered by AWS.

Serverless is an excellent choice for building modern applications. But Containers bring a lot to the table as well. The flexibility of a Container architecture should not be underestimated, especially when it comes to solving complex problems.

This article can serve as a starting point when deciding on your new cloud architecture.

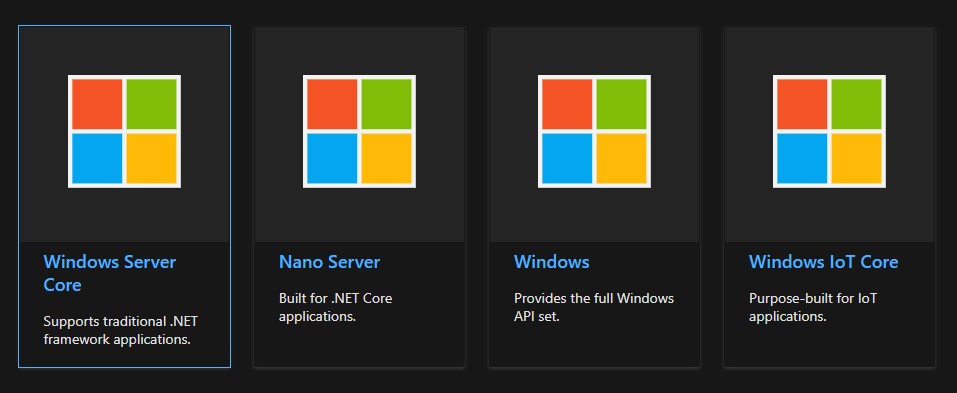

You have a legacy Windows application (or a compatible version) that needs to be part of a digital transformation, and you cannot afford to rewrite it. That leaves you with limited options.

Read More

If/when there are significant gains to be had by our engineers by offering Kubernetes (or any other container orchestration platform) we will explore offering them. At this time there is not a significant gain to be had by offering Kubernetes

Kubernetes is not always the best solution for container workloads…

That’s why at the end of the article I can only repeat the trivial: always try to understand what’s going on, always look for practical benefits, rather than catchy words, and always try stay in the middle.

Long and interesting post on no-code vs. coding for building workflows. It also lists many tools that can be used instead of coding one yourself.

Before Capacity Providers, we had to make sure the resources were up and running before a task could be run. This constraint sometimes left us with unused capacity or uneven scaling of EC2 resources inside the cluster.
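For reference, a capacity provider backed by an Auto Scaling group, with managed scaling turned on, can be declared roughly like this. The ASG ARN variable is hypothetical, and the `aws_ecs_cluster_capacity_providers` resource assumes a newer AWS provider release than the 2.x series.

```hcl
variable "asg_arn" { type = string } # hypothetical ASG running the ECS hosts

resource "aws_ecs_capacity_provider" "ec2" {
  name = "ec2-capacity"

  auto_scaling_group_provider {
    auto_scaling_group_arn         = var.asg_arn
    managed_termination_protection = "DISABLED"

    # ECS scales the ASG so that capacity tracks what tasks actually need
    managed_scaling {
      status          = "ENABLED"
      target_capacity = 100 # aim for fully used instances
    }
  }
}

resource "aws_ecs_cluster" "main" {
  name = "main"
}

# Attach the provider to the cluster and make it the default strategy
resource "aws_ecs_cluster_capacity_providers" "main" {
  cluster_name       = aws_ecs_cluster.main.name
  capacity_providers = [aws_ecs_capacity_provider.ec2.name]

  default_capacity_provider_strategy {
    capacity_provider = aws_ecs_capacity_provider.ec2.name
    weight            = 1
  }
}
```

A `target_capacity` below 100 keeps some spare headroom so tasks can be placed without waiting for new instances, at the cost of some idle capacity.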

Read More

We settled on shipping a wrapper on top of Git that would automatically tweak configs and enable fsmonitor for developers (if it wasn’t already turned on) only for a whitelisted set of repositories

Dropbox explains how they improved Git performance with fsmonitor.

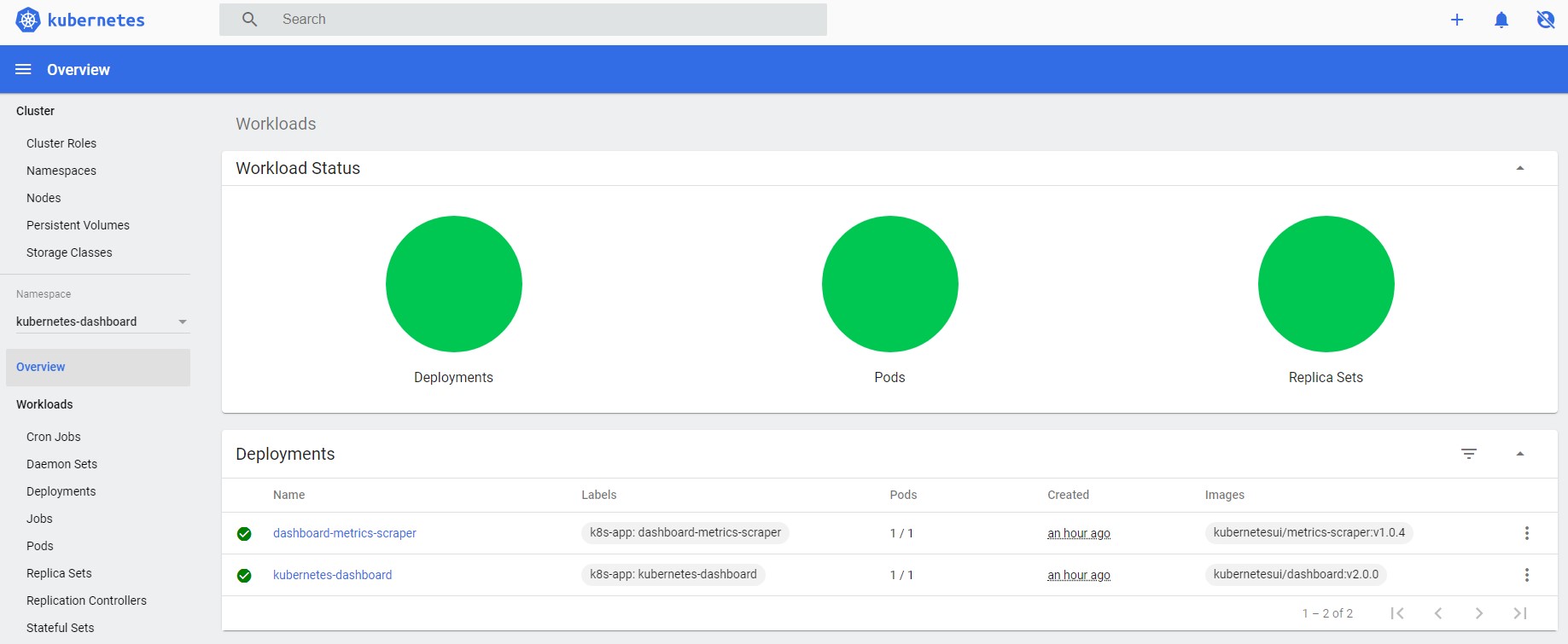

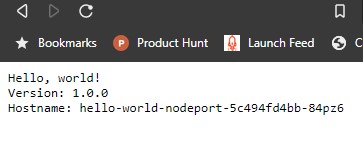

I hope you have built your Kubernetes test cluster using the steps in the first part, Simplest cluster setup for learning Kubernetes. We will now add the Dashboard to our cluster, which lets us manage it through a user interface and provides minimal monitoring capability.

Read More

As such, it provides a solid foundation on which to support the other three capabilities of a cloud-native platform: progressive delivery, edge management, and observability. These capabilities can be provided, respectively, with the following technologies: continuous delivery pipelines, an edge stack, and an observability stack.

Nice article to read before planning Kubernetes-based architectures, with details on tools, processes, etc.

I am sure you have seen Kubernetes widely used in technology circles and wanted to explore it yourself. But the amount of resources available on the internet can be overwhelming, and the complexity involved in learning it might make you lose interest or hesitate to try things out.

Read More

My favorite part was Heroku’s use of industry standards like Docker and Git to manage their pipelines and deployments which made moving my application to their platform nearly an effortless exercise

Interesting post about replicating a custom Kubernetes-based architecture on the Heroku PaaS.

If you are using an S3 backend to save the state and lock changes, something like here, chances are you might occasionally see errors while initializing the backend. This could happen when

Read More

Having a central artifact store enables tighter artifact governance and improves security visibility. This post uses some of these patterns to show you how to integrate AWS CodeArtifact in an effective, cost-controlled, and efficient manner.

JFrog Artifactory alternative from AWS.

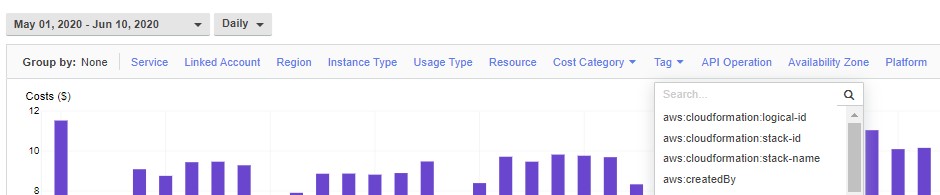

Suppose all your workloads run inside ECS in your AWS account and you receive a bill at the end of the month.

Read More

Autoscaling resources on ECS is simple, but optimizing it for cost and capacity becomes a difficult task once the workload grows.

Read More
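A common starting point for balancing cost and capacity is target tracking on a service's desired count through Application Auto Scaling. The sketch below keeps average CPU near 60%; the cluster and service names, the capacity bounds, the cooldowns and the target value are all assumptions to tune per workload.

```hcl
variable "cluster_name" { type = string } # hypothetical
variable "service_name" { type = string } # hypothetical

# Register the service's desired count as a scalable target
resource "aws_appautoscaling_target" "ecs_service" {
  service_namespace  = "ecs"
  scalable_dimension = "ecs:service:DesiredCount"
  resource_id        = "service/${var.cluster_name}/${var.service_name}"
  min_capacity       = 1
  max_capacity       = 10
}

# Add or remove tasks to keep average CPU around the target value
resource "aws_appautoscaling_policy" "cpu_target" {
  name               = "cpu-target-tracking"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.ecs_service.service_namespace
  scalable_dimension = aws_appautoscaling_target.ecs_service.scalable_dimension
  resource_id        = aws_appautoscaling_target.ecs_service.resource_id

  target_tracking_scaling_policy_configuration {
    target_value       = 60
    scale_in_cooldown  = 300 # scale in slowly to avoid flapping
    scale_out_cooldown = 60  # scale out quickly under load

    predefined_metric_specification {
      predefined_metric_type = "ECSServiceAverageCPUUtilization"
    }
  }
}
```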

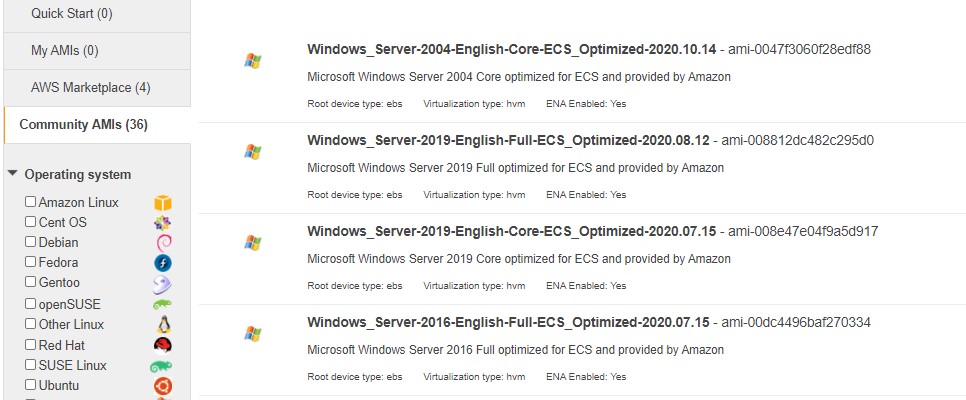

If you already have, or plan to use, Windows workloads on Amazon ECS, be aware that they are not as feature-complete as their Linux counterpart.

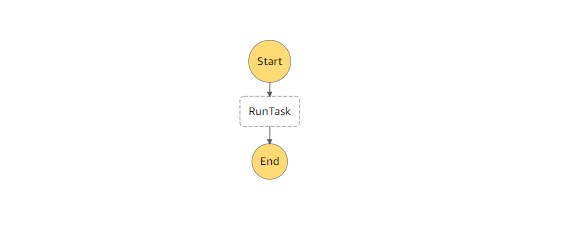

Read More

Run scheduled tasks resiliently with the help of a CloudWatch Events Rule, a Step Functions state machine, and Fargate

A better approach for running scheduled tasks on ECS.

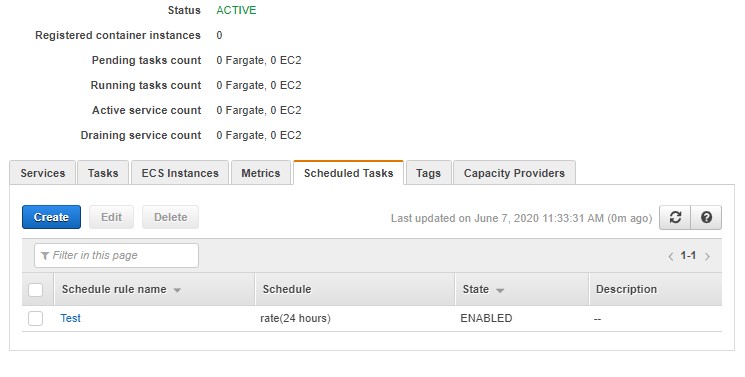

We have a scenario where we just need to run a task at a scheduled time of day, or repeat it every few hours, inside AWS.

Read More
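Assuming the task is an ECS task running on Fargate, a scheduled EventBridge (CloudWatch Events) rule can start it either at a fixed rate or at a fixed time of day. A sketch with hypothetical variables; the role passed as `events_role_arn` is assumed to allow EventBridge to call `ecs:RunTask` and pass the task's IAM roles.

```hcl
variable "cluster_arn" { type = string }         # hypothetical
variable "task_definition_arn" { type = string } # hypothetical
variable "events_role_arn" { type = string }     # hypothetical
variable "subnet_ids" { type = list(string) }    # hypothetical

resource "aws_cloudwatch_event_rule" "every_six_hours" {
  name                = "run-task-every-6h"
  schedule_expression = "rate(6 hours)"
  # For a fixed time of day instead, e.g. 02:00 UTC: "cron(0 2 * * ? *)"
}

resource "aws_cloudwatch_event_target" "run_task" {
  rule     = aws_cloudwatch_event_rule.every_six_hours.name
  arn      = var.cluster_arn
  role_arn = var.events_role_arn

  ecs_target {
    task_definition_arn = var.task_definition_arn
    task_count          = 1
    launch_type         = "FARGATE"

    network_configuration {
      subnets          = var.subnet_ids
      assign_public_ip = false
    }
  }
}
```

Schedule expressions are evaluated in UTC, so a "time of day" schedule has to be converted from local time.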

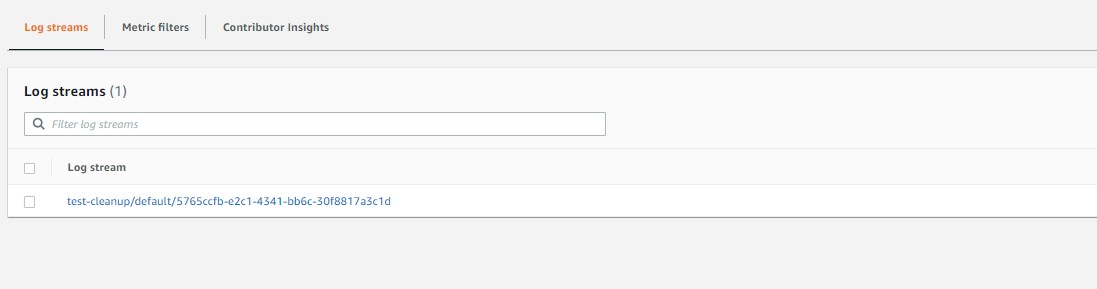

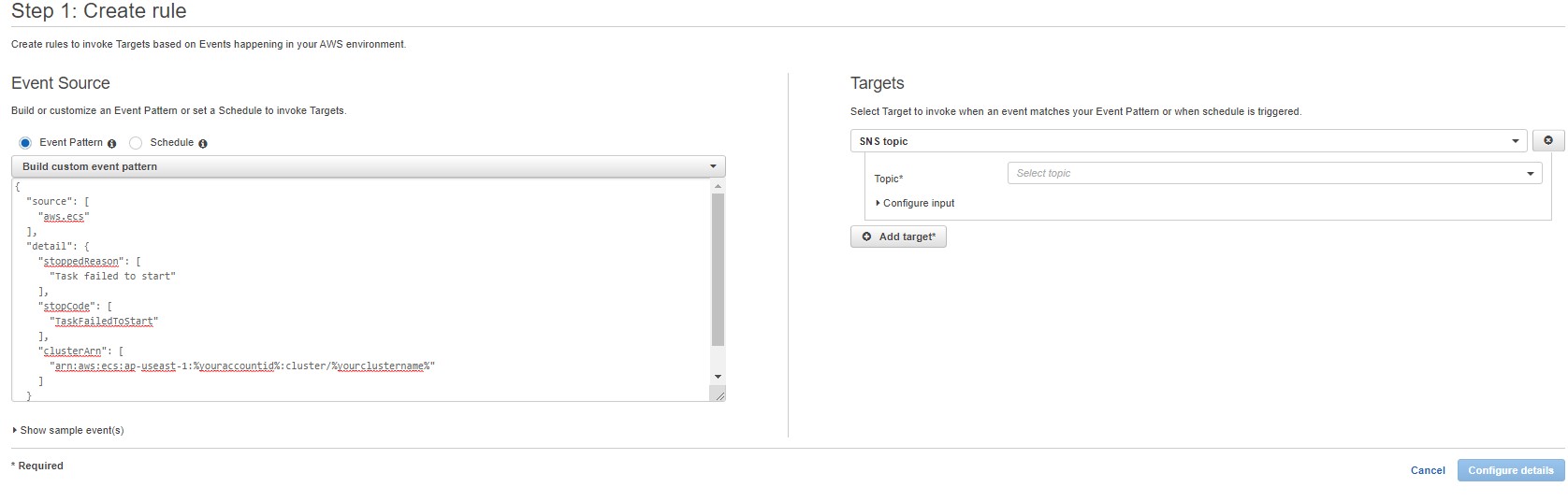

Consider a use case where an ECS task cannot be placed or started on a cluster and fails silently.
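One way to keep such failures from going unnoticed is to catch ECS events in EventBridge and forward them to an alerting topic. The sketch below matches service placement failures (`SERVICE_TASK_PLACEMENT_FAILURE`) and tasks that reach `STOPPED`; the cluster and topic ARNs are hypothetical inputs, and the topic's policy is assumed to already allow `events.amazonaws.com` to publish. Note that `STOPPED` also covers normal stops, so further filtering may be needed if it is noisy.

```hcl
variable "cluster_arn" { type = string }      # hypothetical
variable "alerts_topic_arn" { type = string } # hypothetical, existing SNS topic

# Services that cannot place a task emit an "ECS Service Action" event
resource "aws_cloudwatch_event_rule" "ecs_placement_failure" {
  name = "ecs-task-placement-failure"
  event_pattern = jsonencode({
    source        = ["aws.ecs"]
    "detail-type" = ["ECS Service Action"]
    detail = {
      clusterArn = [var.cluster_arn]
      eventName  = ["SERVICE_TASK_PLACEMENT_FAILURE"]
    }
  })
}

# Tasks that were started but then stopped
resource "aws_cloudwatch_event_rule" "ecs_task_stopped" {
  name = "ecs-task-stopped"
  event_pattern = jsonencode({
    source        = ["aws.ecs"]
    "detail-type" = ["ECS Task State Change"]
    detail = {
      clusterArn = [var.cluster_arn]
      lastStatus = ["STOPPED"]
    }
  })
}

resource "aws_cloudwatch_event_target" "placement_failure_alert" {
  rule = aws_cloudwatch_event_rule.ecs_placement_failure.name
  arn  = var.alerts_topic_arn
}

resource "aws_cloudwatch_event_target" "task_stopped_alert" {
  rule = aws_cloudwatch_event_rule.ecs_task_stopped.name
  arn  = var.alerts_topic_arn
}
```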

Read More

Useful resource for both interviewer and interviewee.

We might come across a scenario where we need to deploy resources in multiple AWS regions as a disaster recovery or backup plan.

With Terraform for infrastructure as code, we can achieve this by using an alias on the provider.

Declare the provider with the primary region as usual.

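```hcl
terraform {
  required_providers {
    # The version constraint is declared here rather than in the provider block
    aws = {
      source  = "hashicorp/aws"
      version = "~> 2.0"
    }
  }
}

provider "aws" {
  region  = "us-east-1"
  profile = "default"
}
```

Declare a second provider with an alias, which can then be referenced while creating resources.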

```hcl
provider "aws" {
  alias  = "backup"
  region = "ap-southeast-1"
}
```

Now we can use the alias to create resources in the primary and backup regions, in our case us-east-1 and ap-southeast-1.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 2.0"
    }
  }
}

# Primary region: the default (un-aliased) provider configuration
provider "aws" {
  region  = "us-east-1"
  profile = "default"
}

# Backup region: referenced from resources as aws.backup
provider "aws" {
  alias  = "backup"
  region = "ap-southeast-1"
}

resource "aws_instance" "Primary-EC2" {
  ami           = var.ami
  instance_type = "t2.micro"

  tags = {
    Name = "Primary-EC2"
  }
}

resource "aws_instance" "Secondary-EC2" {
  provider      = aws.backup
  ami           = var.ami_backup
  instance_type = "t2.micro"

  tags = {
    Name = "Secondary-EC2"
  }
}

# AMI IDs are region-specific, so the backup region needs its own AMI
variable "ami" {
  default = "ami-01d025118d8e760db"
}

variable "ami_backup" {
  default = "ami-0fe1ff5007e7820fd"
}
```

Apply the Terraform configuration to create the EC2 instances in both regions.

Read More

This is a simple and frequently googled task: creating a Terraform backend for deployments in AWS, with a provision for locking the state file.
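A typical setup looks like the sketch below: the state is stored in an S3 bucket, and a DynamoDB table whose partition key is the string attribute `LockID` holds the lock. Bucket, key and table names are hypothetical. The backend block cannot reference variables, and the bucket and table must exist before `terraform init`, so they are usually created in a separate bootstrap configuration.

```hcl
# In the configuration whose state is being stored
terraform {
  backend "s3" {
    bucket         = "my-terraform-state"     # hypothetical, must already exist
    key            = "prod/terraform.tfstate" # object path of the state file
    region         = "us-east-1"
    encrypt        = true
    dynamodb_table = "terraform-locks"        # hypothetical lock table
  }
}

# In a separate bootstrap configuration: the lock table
resource "aws_dynamodb_table" "terraform_locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID" # attribute name the S3 backend uses for locks

  attribute {
    name = "LockID"
    type = "S"
  }
}
```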

Read More

Nice, long article on different branching strategies and source management techniques.

One-word descriptions of all AWS services

Created a place to write about the things I liked, used and found across the world of the web. Every day spent there is an excursion in life, enjoying and learning new things along the way.
